(TippaPatt/Shutterstock)

AI may be driving the bus when it comes to IT investments. But as companies struggle with their AI rollouts, they’re realizing that issues with the data are what’s holding them back. That’s what’s leading Databricks to invest in core data engineering and operations capabilities, which manifested this week with the launch of its Lakeflow Designer and Lakebase products at its Data + AI Summit.

Lakeflow, which Databricks launched one year ago at its 2024 conference, is essentially an ETL tool that enables customers to ingest data from different systems, including databases, cloud sources, and enterprise apps, and then automate the deployment, operation, and monitoring of the data pipelines.

While Lakeflow is great for data engineers and other technical folks who know how to code, it’s not necessarily something that business folks are comfortable using. Databricks heard from its customers that they wanted more advanced tooling that allowed them to build data pipelines in a more automated manner, said Joel Minnick, Databricks’ vice president of marketing.

“Customers are asking us quite a bit ‘Why is there this choice between simplicity and business focus or productionization? Why do they have to be different things?’” he said. “And we said as we kind of looked at this, they don’t have to be different things. And so that’s what Lakeflow Designer is, being able to expand the data engineering experience all the way into the non-technical business analysts and give them a visual way to build pipelines.”

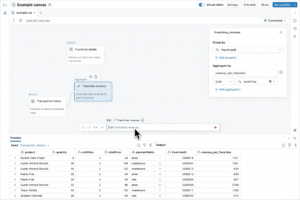

Databricks’ new Lakeflow Designer features GUI and NLP interfaces for data pipeline development

Lakeflow Designer is a no-code tool that allows users to create data pipelines in two different ways. First, they can use the graphical interface to drag and drop sources and destinations for the data pipelines using a directed acyclic graph (DAG). Alternatively, they can use natural language to tell the product the type of data pipeline they want to build. In either case, Lakeflow Designer uses Databricks Assistant, the company’s LLM-powered copilot, to generate SQL to build the actual data pipelines.
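To make the DAG idea concrete, here is a minimal Python sketch of how a pipeline laid out as a directed acyclic graph can be turned into an ordered list of SQL statements. It is not Databricks code and does not use any Lakeflow API: the table names, the SQL text, and the helper functions `build_order` and `render_sql` are all hypothetical, chosen only for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each node maps to the set of nodes it reads from.
# Table names and SQL text are invented for illustration only.
PIPELINE = {
    "raw_orders": set(),
    "raw_customers": set(),
    "clean_orders": {"raw_orders"},
    "orders_by_customer": {"clean_orders", "raw_customers"},
}

# SQL for the derived nodes; source tables are assumed to exist already.
SQL = {
    "clean_orders": (
        "CREATE OR REPLACE VIEW clean_orders AS "
        "SELECT * FROM raw_orders WHERE amount > 0"
    ),
    "orders_by_customer": (
        "CREATE OR REPLACE VIEW orders_by_customer AS "
        "SELECT c.name, SUM(o.amount) AS total "
        "FROM clean_orders o JOIN raw_customers c ON o.customer_id = c.id "
        "GROUP BY c.name"
    ),
}


def build_order(pipeline):
    """Return node names so that every node appears after its inputs.

    Raises graphlib.CycleError if the graph is not acyclic.
    """
    return list(TopologicalSorter(pipeline).static_order())


def render_sql(pipeline, sql):
    """Emit SQL for derived nodes in dependency order; sources have no SQL."""
    return [sql[name] for name in build_order(pipeline) if name in sql]


if __name__ == "__main__":
    for statement in render_sql(PIPELINE, SQL):
        print(statement + ";")
```

The point of the graph structure is that the order of the statements falls out of the dependencies: a step can only run once everything it reads from has been built, and a cycle is rejected outright.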

Data pipelines built by Lakeflow Designer are treated identically to data pipelines built in the traditional manner. Both benefit from the same level of security, governance, and lineage tracking that human-generated code would have. That’s due to the integration with Unity Catalog in Lakeflow Designer, Minnick said.

“Behind the scenes, we talk about this being two different worlds,” he said. “What’s happening as you’re going through this process, either dragging and dropping yourself or just asking assistant for what you need, is everything is underpinned by Lakeflow itself. So as all that ANSI SQL is being generated for you as you’re going through this process, all those connections in the Unity Catalog make sure that this has lineage, this has auditability, this has governance. That’s all being set up for you.”

The pipelines created with Lakeflow Designer are extensible, so at any time, a data engineer can open up and work with the pipelines in a code-first interface. Conversely, any pipelines originally developed by a data engineer working in lower-level SQL can be modified using the visual and NLP interfaces.

“At any time, in real time, as you’re making changes on either side, those changes in the code get reflected in designer and changes in designer get reflected in the code,” Minnick said. “And so this divide that’s been between these two teams is able to completely go away now.”

Lakeflow Designer will be entering private preview soon. Lakeflow itself, meanwhile, is now generally available. The company also announced new connectors for Google Analytics, ServiceNow, SQL Server, SharePoint, PostgreSQL, and SFTP.

In addition to enhancing data integration and ETL, long the bane of CIOs, Databricks is looking to move the ball forward in another traditional IT discipline: online transaction processing (OLTP).

Databricks has been focused primarily on advanced analytics and AI since it was founded in 2013 by Apache Spark creator Matei Zaharia and others from the University of California, Berkeley AMPLab. But with the launch of Lakebase, it’s now getting into the Postgres-based OLTP business.

Lakebase is based on the open source, serverless Postgres database developed by Neon, which Databricks acquired last month. As the company explained, the rise of agentic AI necessitated a reliable operational database to house and serve data.

“Every data application, agent, recommendation and automated workflow needs fast, reliable data at the speed and scale of AI agents,” the company said. “This also requires that operational and analytical systems converge to reduce latency between AI systems and to provide enterprises with current information to make real-time decisions.”

Databricks said that, in the future, 90% of databases will be created by agents. The databases spun up on demand by Databricks AI agents will be Lakebase instances, which the company says can be launched in less than a second.

It’s all about bridging the worlds of AI, analytics, and operations, said Ali Ghodsi, co-founder and CEO of Databricks.

“We’ve spent the past few years helping enterprises build AI apps and agents that can reason on their proprietary data with the Databricks Data Intelligence Platform,” Ghodsi said. “Now, with Lakebase, we’re creating a new category in the database market: a modern Postgres database, deeply integrated with the lakehouse and today’s development stacks.”

Lakebase is in public preview now. You can read more about it at a Databricks blog.

Related Items:

Databricks Wants to Take the Pain Out of Building, Deploying AI Agents with Bricks

Databricks Nabs Neon to Solve AI Database Bottleneck

Databricks Unveils LakeFlow: A Unified and Intelligent Tool for Data Engineering